The Power of Proactive Monitoring: Why Data Observability and Data Quality Matter

March 6th, 2023 WRITTEN BY Vidula Kalsekar - Manager, Client Success Tags: Data, data management, data quality, Industry-agnostic


Data is one of the most significant assets of any organization, and those that can effectively collect and analyze it and make data-driven decisions stand to gain a significant advantage over their competitors. Trusting that data is therefore paramount to success.

Gartner predicts that by 2025, 60% of data quality monitoring processes will be autonomously embedded and integrated into critical business workflows. Today, despite all the advanced technologies available, this process is still 50-70% manual because it follows a reactive approach. It depends entirely on data subject matter experts (SMEs) and stewards, so instead of focusing fully on data insights, they spend the bulk of their time on constant sampling, profiling, and adding new data monitoring rules to ensure the data is accurate, complete, consistent, and unique. To determine the health of their systems, these SMEs must track pre-defined metrics, which means they must already know what issues they are looking for and what information to track. With this reactive approach, only 25-40% of data quality (DQ) problems are identified before they create a trickle-down impact. Organizations therefore need a proactive approach to data health monitoring, in which data observability is layered on top of data quality.
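To make the reactive, rule-based approach concrete, here is a minimal sketch in Python of pre-defined checks for two of the dimensions mentioned above, completeness and uniqueness. The rule names, column names, thresholds, and sample records are all hypothetical and chosen only for illustration; they are not taken from any particular tool.

```python
def completeness(rows, column):
    """Share of rows where `column` is present and not None."""
    if not rows:
        return 0.0
    filled = sum(1 for r in rows if r.get(column) is not None)
    return filled / len(rows)


def uniqueness(rows, column):
    """Share of non-null values in `column` that are distinct."""
    values = [r[column] for r in rows if r.get(column) is not None]
    if not values:
        return 0.0
    return len(set(values)) / len(values)


# Each rule: (name, metric function, column, minimum acceptable score).
# These rules and thresholds are assumptions made for this example.
RULES = [
    ("email_complete", completeness, "email", 0.95),
    ("id_unique", uniqueness, "customer_id", 1.0),
]


def run_rules(rows, rules=RULES):
    """Evaluate every rule and return (name, score) for each one that fails."""
    failures = []
    for name, metric, column, threshold in rules:
        score = metric(rows, column)
        if score < threshold:
            failures.append((name, round(score, 2)))
    return failures


# Hypothetical sample data: one missing email, one duplicated id.
customers = [
    {"customer_id": 1, "email": "a@example.com"},
    {"customer_id": 2, "email": None},
    {"customer_id": 2, "email": "c@example.com"},
    {"customer_id": 3, "email": "d@example.com"},
]
failed = run_rules(customers)
```

Both rules fail on this sample. The limitation is also visible: any problem that nobody wrote a rule for, such as a malformed email address, passes unnoticed.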

Bringing Data Quality and Observability together, here’s the ultimate solution to achieving healthy data:

Although data observability is built on the concept of data quality, it goes further: it not only describes a problem but also explains it (and in some cases resolves it) and prevents it from recurring.

Data-driven organizations should focus on the following five pillars to provide real-time insights into data quality and reliability, along with the traditional data quality dimensions:

- Freshness: Check how current the data is and how often the data is updated

- Distribution: Check if the data values fall within an acceptable range; reject or alert if not

- Volume: Check if the data is complete and consistent; identify the root cause if not and provide recommendations

- Schema: Track changes in how the data is organized, with real-time updates on changes made by multiple contributors

- Lineage: Record and document the entire flow of data from initial sources to end consumption
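As an illustration, the first four pillars can be expressed as small checks over one load of a table. The sketch below uses only the Python standard library; the `Batch` shape, function names, tolerances, and sample values are assumptions made for this example, and lineage is left out because it requires metadata about upstream and downstream systems.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from statistics import mean, stdev


@dataclass
class Batch:
    """One load of a table: its rows and arrival time (hypothetical shape)."""
    rows: list
    loaded_at: datetime


def check_freshness(batch, now, max_age):
    """Freshness: the batch must have arrived within max_age of now."""
    return now - batch.loaded_at <= max_age


def check_volume(batch, history_counts, tolerance=0.5):
    """Volume: row count must be within +/- tolerance of the historical mean."""
    expected = mean(history_counts)
    return abs(len(batch.rows) - expected) <= tolerance * expected


def check_distribution(batch, column, history_values, z_max=3.0):
    """Distribution: return values more than z_max std devs from the historical mean."""
    mu, sigma = mean(history_values), stdev(history_values)
    return [r[column] for r in batch.rows if abs(r[column] - mu) > z_max * sigma]


def check_schema(batch, expected_columns):
    """Schema: report columns added or missing relative to the expected set."""
    seen = set().union(*(r.keys() for r in batch.rows))
    return {
        "added": sorted(seen - expected_columns),
        "missing": sorted(expected_columns - seen),
    }


# Hypothetical batch: loaded two hours ago, one extreme amount, one extra column.
now = datetime(2023, 3, 6, 12, 0, tzinfo=timezone.utc)
batch = Batch(
    rows=[{"id": 1, "amount": 20.0}, {"id": 2, "amount": 950.0, "note": "x"}],
    loaded_at=now - timedelta(hours=2),
)
report = {
    "fresh": check_freshness(batch, now, timedelta(hours=24)),
    "volume_ok": check_volume(batch, history_counts=[2, 3, 2]),
    "outliers": check_distribution(batch, "amount", [18.0, 22.0, 19.0, 21.0, 20.0]),
    "schema": check_schema(batch, {"id", "amount"}),
}
```

Unlike hand-written rules, the volume and distribution checks here derive their thresholds from historical values, which is one simple way a monitor can flag issues that nobody anticipated in advance.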

By observing these five pillars, data SMEs (Subject Matter Experts) can gain new insights into how data interacts with different tools and moves around their IT infrastructure.

This will help uncover issues and improvements that were not anticipated, resulting in a shorter mean time to detection (MTTD) and mean time to resolution (MTTR). However, this is easier said than done, because the current technology landscape offers few tools that can proactively observe data across these five pillars.

The Future of Data Quality is to be proactive.

Proactive monitoring is a key component of gaining more value from data. By proactively monitoring data observability and quality, organizations can identify issues quickly and resolve them before they become major problems. This also helps them understand their data better, resulting in better decision-making and improved customer experiences.

This is where Fresh Gravity’s DOMaQ tool finds its niche: it enables business and technical users alike to identify, analyze, and resolve data quality and data observability issues. The DOMaQ tool uses a mature AI-driven prediction engine.

Fresh Gravity’s DOMaQ Tool

Fresh Gravity’s DOMaQ (Data Observability, Monitoring, and Data Quality Engine) enables business users, data analysts, data engineers, and data architects to detect, predict, prevent, and resolve issues, sometimes in an automated fashion, that would otherwise break production analytics and AI. It takes the load off the enterprise data team by ensuring that the data is constantly monitored, data anomalies are automatically detected, and future data issues are proactively predicted without any manual intervention. This comprehensive data observability, monitoring, and data quality tool is built to ensure optimum scalability and uses AI/ML algorithms extensively for accuracy and efficiency. DOMaQ proves to be a game-changer when used in conjunction with an enterprise’s data management projects (MDM, Data Lake, and Data Warehouse Implementations).

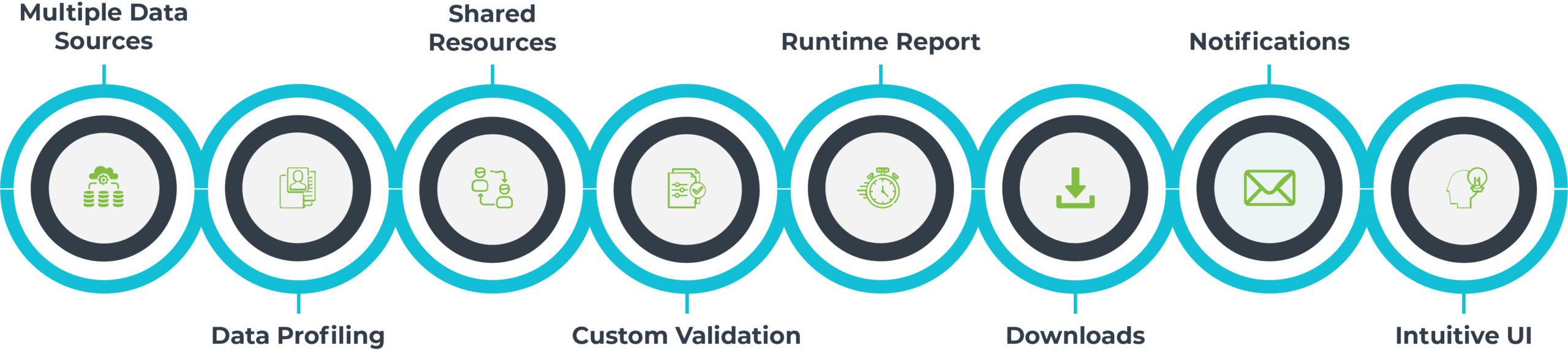

Key Features of Fresh Gravity’s DOMaQ tool:

- Connects, scans, and inspects data from a wide range of sources

- Saves 50-70% of arduous manual labor through auto-profiling

- Automates data quality controls by using machine learning to explain the root cause of a problem and to predict new monitoring rules based on evolving data patterns and sources

- Comes with 100+ data quality/validation rules to monitor the consistency, completeness, correctness, and uniqueness of data periodically or constantly

- Helps in preventing trickle-down impact by generating alerts when data quality deteriorates

- Supports collaborative workflows: users can keep their work separate or share it with the team for review and reuse

- Allows users to generate reports, build data quality KPIs, and share data health status across the organization

The future of data quality with DOMaQ is magical since this AI-driven proactive monitoring will enable businesses and IT to work together on the data from inception to consumption and will ensure that the “data can be trusted.”


If you’d like a demo, please write to vidula.kalsekar@freshgravity.com or soumen.chakraborty@freshgravity.com.
