Execution Is the New Strategy: What Top Leaders at Fresh Gravity Are Doing Differently

December 23rd, 2025 WRITTEN BY FGadmin

Written by Sonali Kulkarni, Sr. Manager, People & Talent

In leadership conversations, there is a long-standing belief that senior leaders should focus on strategy — the what — while delegating the how to their teams. Vision, direction, and resource allocation are considered “top-of-the-house” priorities, while operational workflows are left to managers and frontline employees.

But at Fresh Gravity, we have a different approach. The success of Fresh Gravity is led by executives and directors who are deeply engaged in how work actually gets done — not by micromanaging, but by shaping the systems, practices, and behaviors that drive everyday execution.

This leadership style, often termed “hands-on leadership,” has played a pivotal role in our sustained success.

So, what sets hands-on leaders apart?

- Focusis relentlessly onwhat clients value

Our hands-on leaders define success using metrics that matter to clients — not just internal dashboards. They ensure their units/teams deliver on what clients truly care about: speed, quality, consistency, value. This sharpens priorities and embeds an external focus into daily decision-making.

- They Architect How Work Gets Done

Rather than treating processes as tactical details, our leaders actively design and refine workflows, structures, and decision pathways. They create systems that empower teams to work efficiently and autonomously, ensuring that excellence is baked into how Fresh Gravity operates.

- They Use Experiments and Data to Drive Decisions

Hierarchy takes a back seat. Instead of relying on authority or intuition, our hands-on leaders encourage experimentation, measurement, and iteration. They create a culture where data guides decisions and failures become opportunities for continuous learning and improvement.

- They Lead by Teaching, Not Just Directing

Instead of issuing instructions from the sidelines, our hands-on leaders roll up their sleeves and teach teams the tools, methods, and principles required to execute at a high standard. Their leadership is rooted in mentorship — showing people how to do things better, not simply telling them to.

- They Build a Habit of Continuous Improvement

For our leaders, improvement is not a once-a-year initiative — it is a daily discipline. They champion a culture of “better, faster, and scalable” by driving small, consistent enhancements that, over time, create a compounding advantage competitors find difficult to match.

I can confidently say this approach truly works for Fresh Gravity. Hands-on leaders ensure that great strategies do not get lost in execution. By staying close to the work, they remove friction, clarify priorities, and address problems early. Clear systems and shared expectations empower teams to make confident, consistent decisions. A culture of experimentation accelerates learning and enables the organization to evolve more effectively. And when excellence is built into systems—not reliant on individuals—the organization continues to thrive, regardless of leadership changes.

What This Means for Today’s Leaders

For leaders in any function — including HR, operations, engineering, technology, and business — hands-on leadership provides a powerful blueprint:

- Be curious about how work gets done.

- Help teams build better systems instead of fixing problems reactively!

- Turn coaching into a daily habit.

- Use data and experimentation to steer decisions.

- Promote continuous improvement over one-time transformation programs.

Above all, hands-on leadership is not about control. It is about enabling excellence — thoughtfully, intentionally, and consistently.

In a world where execution differentiates winners from the rest, leaders who understand and shape the how are poised to build organizations that adapt faster, deliver better, and outperform for the long term.

A Unified Graph Approach for the Next Era of Intelligent Systems

December 17th, 2025 WRITTEN BY FGadmin Tags: enterprise AI, hybrid architecture, intelligent systems, unified graph approach

Written by Soumen Chakraborty, Vice President, Artificial Intelligence

During a recent Life Sciences data modernization workshop, a VP paused and asked:

“Should we use RDF or a Property Graph? Which one works better for AI and agentic workflows?”

We hear this question all the time.

But the real issue is not choosing one over the other — it’s the assumption that they serve the same purpose.

- RDF and LPG solve fundamentally different problems

- AI behaves differently with each

- No production-grade AI system uses either in isolation

After delivering ontology-driven extraction, lineage systems, record-level DQ agents, and protocol-mining pipelines, one truth is clear:

Modern enterprise AI requires a hybrid of RDF, LPG, ontologies/schemas, and vector grounding.

This blog clarifies where each fits — with real examples, not theory.

-

The Two Graph Families in Practice

RDF (Resource Description Framework)

RDF is the semantic layer — designed for meaning, rules, and governance. It represents knowledge as triples:

(Study123 — hasTrialPhase — Phase3)

RDF excels when enterprises need:

-

- Standard terminology

- Regulatory clarity

- Precise definitions

- Machine-understandable meaning

- Validation via SHACL

- Explainability

Where Fresh Gravity Used RDF: Protocol Mining & Document Digitizer

For a Life Sciences client, our ontology (RDF + OWL) is defined:

-

- What a Protocol, Arm, Cohort, Endpoint, and Procedure actually mean

-

- How do the Study Design elements relate

-

- Which metadata fields are mandatory

-

- How extracted entities must be validated

RDF did not extract text on its own — schemas, and AI did that. But RDF ensured the extracted entities were:

-

- Consistent

- Valid

- Semantically aligned

- Explainable

RDF gave us the meaning. AI + schema gave us close to 100% accurate extraction.

LPG (Labelled Property Graph)

LPG is the operational layer — optimized for:

-

- High-speed traversal

- Relationship intelligence

- Pattern discovery

- Graph analytics

- Fraud-like behavior

- Identity linking

Nodes and edges can carry properties:

(Patient456 {age:72}) —[filedClaim]-> (Claim9823)

Where Fresh Gravity Used LPG: Identity Resolution & Record-Level Lineage

Identity Resolution

For a data stewardship program, LPG enabled us to:

-

- Detect clusters of duplicate patients

- Traverse millions of edges

- Identify hidden connections in seconds

Record-Level Identity Lineage

For another client, LPG allowed us to:

-

- Trace every “data hop” or transformation

- Explore lineage metadata across dozens of pipelines

- Uncover indirect dependencies

- Pinpoint the exact upstream sources affecting a record

This level of traversal is complicated with RDF, but LPG handled it effortlessly.

-

Important Reality Check: Graphs Alone Don’t Stop Hallucination or Improve Extraction

Some AI blogs (- even GPT models) claim:

“Use an ontology/graph and hallucinations disappear.”

That is not true in practice (at least for the current generation of models as of this writing).

Across our implementations, three facts consistently hold:

Fact 1 — Schema-driven extraction is more reliable than ‘only’ ontology/graph-driven extraction

LLMs respond far better to:

-

- Structured JSON schemas

- Output templates

- Examples

- Field definitions

Ontologies enrich extraction but rarely improve raw extraction accuracy by themselves.

Document Digitizer uses:

-

- Schemas → for extraction

-

- RDF/OWL → for classification, consistency, validation

Fact 2 — Vector databases (VDB) are essential for grounding

LLMs do not efficiently query ontologies or graphs directly.

We rely on:

-

- Vector embeddings

- Hybrid retrieval

- Chunk-level semantic search

- Ontology-backed disambiguation

VDB ≠ RDF ≠ LPG — each solves a different grounding need.

Fact 3 — AI needs a multi-layered approach

A mature enterprise AI stack uses:

-

- Schemas → structure

- Ontologies (RDF/OWL) → meaning

- LPG → relationship intelligence

- Vector DB → grounding

- Agents & Tools (with LLMs/SLMs) → orchestration

Graphs are powerful — but only part of the system.

-

When Should You Use What?

Use RDF when you need:

-

- Semantic consistency

- Domain meaning

- Controlled vocabularies

- Lineage semantics

- Rule validation (SHACL)

- Regulatory auditability

Example:

Validating Clinical Trial metadata.

Use LPG when you need:

-

- Fast traversal

- Relationship patterns

- Fraud-like detection

- Dynamic 360 views

- Deduplication & linking

- Impact exploration at scale

Example:

Detecting duplicate patient entities across millions of rows.

Use BOTH when you need:

-

- AI-ready hybrid Knowledge Base, semantic + operational lineage

Example:

RDF (via OWL) defined:

- AI-ready hybrid Knowledge Base, semantic + operational lineage

“A Form must belong to one Submission.”

“A Submission must reference ≥ 1 Report.”

LPG answered real questions:

“If variable X changes, which forms break?”

“Which downstream submissions are impacted?”

“Which datasets feed those forms?”

-

- End-to-end explainability

- Scalable data quality and data governance workflows

- Mapping + rule enforcement + traversal

Which, honestly… is what every enterprise wants today.

-

The Future: Agentic AI Needs Meaning + Relationships + Retrieval

AI agents operating on enterprise data need to:

-

- Enforce semantic rules (RDF + SHACL)

- Understand domain meaning (OWL)

- Analyze relationships efficiently (LPG)

- Detect anomalies & duplicates (LPG)

- Retrieve grounding context (Vector DB)

- Maintain traceability (RDF + lineage)

Every successful FG delivery has followed this pattern:

-

- RDF for meaning

- LPG for relationship intelligence

- Schemas for extraction

- Vectors for grounding

- AI/LLMs for reasoning

- Agents for automation

This is what modern enterprise AI actually looks like.

Final Thoughts

If your team is debating:

“RDF vs LPG — which one should we choose?”

You’re asking the wrong question.

The real question is:

“Where do we need semantic meaning and constraint-based reasoning?

Where do we need fast traversal and operational insight?

And how will our AI agents orchestrate schemas, vectors, RDF, and LPG together?”

At Fresh Gravity, we stopped guessing.

We built. We delivered. What consistently works is a hybrid, purpose-driven architecture for enterprise AI.

Listening to Empower: The Fresh Gravity Way

November 17th, 2025 WRITTEN BY FGadmin Tags: employee centric, Employee engagement, our work culture

Written by Nital Sawde, Sr. Specialist, People & Talent

At Fresh Gravity, we believe that our people are at the heart of everything we do. Every innovation, every idea, and every achievement begins with the passion and commitment of our team members. Yet, one of the most powerful contributors to Fresh Gravity’s success isn’t just what we communicate to our people or ask them to do, but it’s how deeply we listen to them.

Our culture is such that we strongly believe that a happy team leads to happy clients.

Hence, we ensure to gather feedback from employees. It is more than collecting opinions; it’s about understanding experiences, building trust, and creating an environment where everyone feels valued and heard.

The feedback we gather serves as a bridge between leadership and employees, helping us see the organization through the eyes of those who live its values every day. At Fresh Gravity, we encourage open and honest dialogue — whether through surveys, one-on-one connects, or informal conversations — because listening is the first step toward learning and improving.

We believe feedback fuels both engagement and satisfaction. While closely connected, these two come from distinct experiences:

Employee satisfaction reflects how content employees feel with their role, work environment, and leadership support.

Employee engagement goes further; it’s about how emotionally and intellectually invested they are in contributing to the company’s success.

When we act on feedback — by improving policies, enhancing communication, or refining processes — employees see that their voices lead to real change. This not only boosts satisfaction but also cultivates a strong sense of ownership, pride, and empowerment.

Key Stages Where Employee Feedback Is Gathered

-

Onboarding Stage

Purpose: To understand the new joiner’s initial experience and identify onboarding gaps.

Focus Areas:

-

- Clarity of role and responsibilities

-

- Support from the onboarding team

-

- Access to tools, resources, and information

-

- First impressions of company culture

-

Probation Feedback

Purpose: To capture the early journey experience and ensure alignment of expectations.

Focus Areas:

-

- Quality of induction and training

-

- Role clarity and workload

-

- Manager and team support

-

- Integration into company culture

-

- Inclusiveness in the project and the project team

-

Performance Review Stage

Purpose: To facilitate two-way feedback between managers and employees during goal setting and appraisal cycles.

Focus Areas:

-

- Goal clarity and performance expectations

-

- Opportunities for skill development

-

- Manager support and recognition

-

- Suggestions for team or process improvement

-

Engagement Surveys

Purpose: To measure overall satisfaction and engagement across the organization.

Focus Areas:

-

- Workplace culture and communication

-

- Leadership effectiveness

-

- Career growth and learning opportunities

-

- Work-life balance and well-being

-

Leaders as Listeners

Purpose: To create space for open dialogue.

At Fresh Gravity, our leaders are active listeners. Through our “Leadership Connects” sessions, they meet with small groups of employees every quarter to understand their challenges, gather improvement ideas, and discuss these insights in Executive Meetings to shape future strategies.

This kind of leadership strengthens relationships, enhances collaboration, and inspires people to bring their best selves to work every day.

-

Exit Interviews

Purpose: To gather honest feedback from departing employees to identify trends that may impact retention.

Focus Areas:

-

- Reasons for leaving

-

- Manager and leadership experience

-

- Work culture and team dynamics

-

- Suggestions for improvement

-

Ad-hoc/Open Feedback Channels

Purpose: To encourage continuous dialogue beyond formal feedback cycles.

Examples:

-

- HR connects, skip-level meetings, or pulse check-ins

-

- Anonymous suggestion boxes or digital feedback tools

-

- One-on-one discussions with managers

Conclusion

At Fresh Gravity, feedback isn’t a one-time activity — it’s an ongoing conversation. By valuing every voice, we continue to shape a workplace where people feel empowered, heard, respected, and inspired to grow.

Because when we listen to our people, we don’t just improve processes — we strengthen our culture.

And that’s what keeps Fresh Gravity moving forward — together.

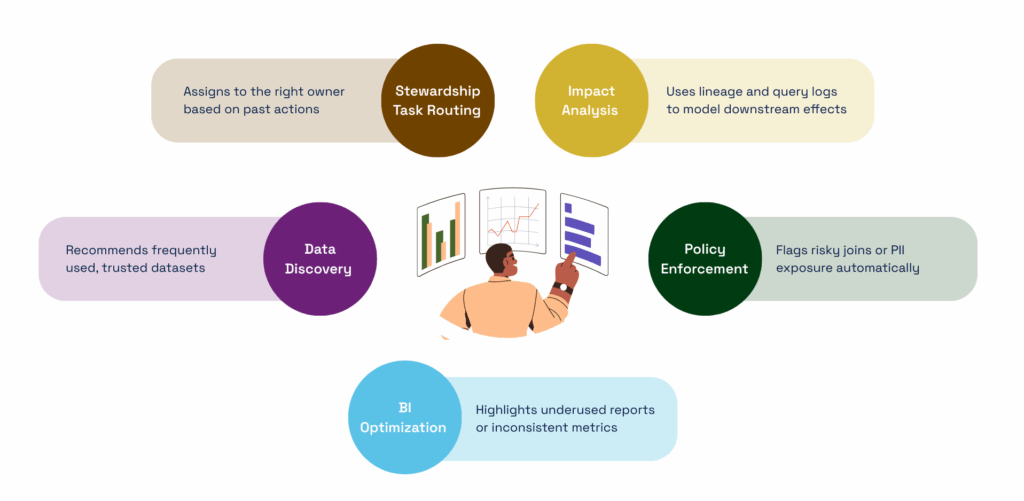

Transparent Policy Centers: Building Trust Through Governed Data Access

October 28th, 2025 WRITTEN BY FGadmin Tags: data governance, transparent policy center, unify data policies

Written by Neha Sharma, Sr. Manager, Data Management

In today’s data-driven landscape, governance without transparency is like navigation without a map. As organizations strive to strike a balance between agility and control, Transparent Policy Centers are emerging as a powerful framework to unify data policies, enforce governance, and empower business users simultaneously.

But what does transparency in policy governance really look like? And how can we make it actionable across modern data stacks?

What Is a Transparent Policy Center?

A Transparent Policy Center is a centralized, accessible interface that allows teams to:

- Document and publish data policies (access, classification, retention, etc.)

- Translate policies into business-friendly language

- Connect policies to actual data assets, users, and systems

- Track adherence, violations, and policy-related activity

The goal? Make governance understandable, actionable, and trusted — not just enforced.

Why Organizations Are Investing in It

Traditional policy management often falls short:

- Access controls are opaque — users don’t know why access is denied

- Policies are scattered across PDFs or SharePoint folders

- Manual enforcement creates bottlenecks and inconsistencies

- Audits become stressful fire drills instead of smooth validations

A Transparent Policy Center shifts governance from a backstage function to a visible, user-aware service.

Core Capabilities of a Transparent Policy Center

Whether custom-built or powered by a governance platform, a well-designed Policy Center should offer:

- Central Policy Catalog

- Consolidates all policies (e.g., data usage, privacy, classification)

- Organized by domain, region, or compliance framework

- Business-Friendly Policy Views

- Explains rules in non-technical language for analysts and stakeholders

- Highlights the “why” and “how” behind each rule

- Policy-to-Data Mapping

- Shows which tables, dashboards, or APIs are governed by each policy

- Visualizes policy impact using lineage and usage metadata

- Live Enforcement Integration

- Embeds with access control tools, data catalogs, and pipeline orchestration tools

- Captures real-time violations or policy conflicts

- Access Request & Escalation Workflow

- Users can request access with full visibility into approval paths

- Tracks status, SLAs, and owner accountability

Who Benefits from Transparent Policy Centers?

Stakeholder |

Benefits |

| Data Consumers | Understand access rules before hitting blockers |

| Stewards | Apply governance with clarity and context |

| Compliance Teams | Gain visibility into policy status and adherence |

| Executives | See governance metrics that support risk and trust initiatives |

How to Build a Transparent Policy Center

You don’t need to start from scratch — most modern data stacks already have the building blocks. Key enablers include:

- A metadata management platform (catalog or fabric)

- Policy modeling and documentation templates

- Lineage, classification, and usage tracking tools

- UI/UX layer that surfaces policies at point-of-use (e.g., within BI tools or data request portals)

- Integration with access control and ticketing systems (e.g., identity providers, JIRA, ServiceNow)

Example Use Cases

Transparent Policy Centers become invaluable across industries:

- Healthcare – Clarify HIPAA-based access to patient data

- Banking – Display BCBS 239-aligned policies on risk data

- Retail – Govern product metadata usage across systems

- Global Enterprises – Show GDPR/CCPA impact on customer attributes

Looking Ahead: Transparent, Trusted, and Autonomous

As AI begins to assist in governance, transparency becomes even more critical. Future-ready Policy Centers will:

- Flag policy conflicts or violations in real time

- Suggest owners or reviewers based on activity metadata

- Enable explainability for AI-driven policy enforcement

- Serve as a system of record for audits and trust scoring

How Fresh Gravity Can Help

At Fresh Gravity, we help enterprises design and implement Transparent Policy Centers that are:

- Aligned with business goals and compliance mandates

- Integrated with metadata, catalog, and access platforms

- Augmented with automation and stewardship intelligence

- Designed for adoption across both technical and business users

We work with leading data platforms and tailor solutions to your stack — whether you’re using Snowflake, Databricks, Informatica, Alation, or others.

Ready to Make Data Governance Transparent?

Let’s move governance from a black box to a shared, trusted, and automated experience.

Reach out at www.freshgravity.com/contact-us/

A Master Data-Led Approach to CMC Data Strategy

October 9th, 2025 WRITTEN BY FGadmin

Written By Preeti Desai, Sr. Manager, Client Success and Colin Wood, Strategy & Solutions Leader, Life Sciences

In the previous blog, we established that we can no longer afford to treat CMC data as something created just for submissions. This data holds immense operational and strategic value for analytics, process optimization, and automated regulatory submissions. But to unlock that value, data quality and structure are paramount.

We also looked at how implementing a CMC data model — a foundational framework that organizes and links entities such as materials, manufacturing processes, test methods, and experiments transforms fragmented information into an integrated system of scientific truth

There’s growing enthusiasm in pharma to apply ontologies to CMC (Chemistry, Manufacturing, and Controls) and regulatory data, and for good reason. Ontologies can bring semantic meaning, relationships, and machine-readability to data models. But attempting to use ontologies to cleanse and standardize legacy data is often misguided and inefficient.

Anyone who’s dealt with legacy data knows how messy it can be:

- If the material code starts with ‘TMP’, it was temporary and might not be valid

- Between 2020–2022, we used different naming conventions

- Batch numbers used to include site codes, but now they don’t

These kinds of inconsistent business rules are often undocumented, approximated, and full of edge cases.

Now imagine trying to model all that historical inconsistency into an ontology. You’d have to:

- Capture every exception, outdated meaning, and local rule

- Maintain conflicting definitions and overlapping hierarchies

- Build logic that explains how things used to be, not just how they should be

This quickly becomes unmanageable and defeats the purpose of ontologies, which are meant to add clarity and meaning, not capture legacy confusion.

In an era where life sciences organizations are increasingly turning to knowledge graphs, ontologies, and semantic data layers to drive digital transformation, a foundational truth is often overlooked: You cannot infer meaning from data that lacks structural and referential integrity.

When it comes to structuring CMC data, the two most essential pillars are:

- A Blueprint: A data model that defines entities, relationships, and constraints

- A Backbone: A governing Master Data Management (MDM) system that ensures the reliability and consistency of that data across sources and lifecycles

Data Model Without MDM Is an Incomplete Scaffold

A data model is the abstraction of your CMC domain. It defines:

- What entities exist (e.g., Batch, Manufacturing Process Step, Test, Stability Study)

- What attributes they hold (e.g., batch expiry date, test name, manufacturing site code, stability study start date)

- How they relate (e.g., a drug product is a formulation composed of ingredients, which are substances, manufactured at specific sites)

But in practice, even the most elegant data model fails without high-quality data that populates it, which is where MDM comes in.

Without MDM:

- Entity uniqueness is compromised — e.g., the same material could be listed under 5 different names

- Hierarchy and versioning are ambiguous — e.g., which version of a manufacturing process step applies to which submission

- Data provenance is unclear — e.g., is the acceptance criteria for pH range sourced from R&D specs or commercial process validation

A model without governed data is like a periodic table filled with scribbles. The structure is there, but the contents are unreliable, so no inference can be made.

Why MDM Without a Data Model Leads to Confusion and Data Debt

On the flip side, deploying MDM without a foundational data model turns your MDM into a glorified data registry — a collection of fields with no semantic or structural consistency.

Let’s take an example:

Suppose you’re managing “Packaged Medicinal Product” as a master data domain. Without a model, this could be:

- A free-text field in ELN

- A picklist in SAP

- A coded term in a regulatory XML schema

- A synonym list held in a reference data system

Without a model defining the context and relationships — i.e., how Packaged Medicinal Product relates to Manufactured Item, Pharmaceutical Product, strength, route of administration, container, your MDM becomes disconnected fragments rather than a unified source of truth.

MDM provides governance. The data model provides structure for the data and allows business meaning to be assigned to each entity and attribute.

They are co-dependent — not optional.

Only when these two are in place does the third layer — ontologies and knowledge graphs — can begin to generate value. This delivers semantic meaning for the data, allowing richer insights to be inferred from the data.

The next blog in the series will highlight the growing importance of IDMP in the CMC data model with the recent ICH M4Q updates. We will also cover how organizations can confidently begin layering semantic technologies such as ontologies and knowledge graphs to unlock new capabilities in automation, compliance, and analytics. Stay tuned!

Beyond the Hype: Why Data Management Needs Smaller, Smarter AI Models

October 6th, 2025 WRITTEN BY FGadmin Tags: data management, smarter AI models

Written by Soumen Chakraborty, Vice President, Artificial Intelligence

Introduction

Over the past two years, Large Language Models (LLMs) have dominated the AI conversation. From copilots to chatbots, they’ve been positioned as the universal answer to every problem. However, as enterprises begin to apply them to their data management problems, the cracks are starting to show – high costs, scalability issues, and governance risks.

At Fresh Gravity, we’ve been asking a simple question: Do you really need a billion-parameter model to validate a single record, reconcile a dataset, or generate a SQL query?

Our conclusion: not always. In fact, for many of the toughest data management challenges, smaller, task-focused models (SLMs/MLMs) are often a better fit.

The Limits of LLMs in Data Management

LLMs are brilliant at broad reasoning and open-ended generation. However, when applied to day-to-day row/column-level data management, three major issues emerge:

- Cost Explosion: Running LLMs on millions of record-level checks quickly becomes unaffordable

- Scalability Problems: They’re optimized for rich reasoning, not for repetitive, structured operations

- Governance Risks: Hallucinations or inconsistent outputs can’t be tolerated in regulated industries

That’s why betting everything on LLMs is not a sustainable strategy for enterprise data.

Why Smaller Models Make Sense

Small and Medium Language Models (SLMs/MLMs) offer a different path. They don’t try to solve every problem at once, but instead focus on being:

- Cost-efficient – affordable to run at scale

- Task-focused – fine-tuned for narrow, high-value problems

- Fast – optimized for record-level operations

- More governable – easier to constrain in compliance-heavy environments

Smaller doesn’t mean weaker. It means smarter, leaner, and more practical for the jobs that matter most.

Real Models Proving This Works

The industry already has strong evidence that smaller models can shine:

- LLaMA 2–7B (Meta): Efficient and fine-tunable, great for SQL and mapping tasks

- Mistral 7B: Optimized for speed, yet competitive with much larger LLMs

- Phi-3 (Microsoft): A 3.8B parameter model with curated training data, surprisingly good at reasoning

- Falcon 7B: Enterprise-friendly balance of performance and cost

- DistilBERT/MiniLM: Trusted for classification, entity extraction, and standardization

These models show that parameter count isn’t everything. With fine-tuning, SLMs often outperform LLMs for specific, repetitive tasks in the enterprise.

The Art of Possibility for Data Management

Now imagine what this opens for data teams:

- Models that catch bad data before it spreads, flagging missing, inconsistent, or invalid records instantly

- AI that can spin up pipelines with built-in guardrails, reducing manual coding and human error

- The ability to compare massive datasets in seconds, highlighting mismatches at scale

- Smarter matching engines that can spot duplicates across millions of records without endless rule-writing

- Query tools that let anyone turn plain English into SQL or SparkQL, putting data access in everyone’s hands

This is where smaller, focused models excel, doing the heavy lifting of data management tasks reliably, quickly, and cost-effectively

Where SLMs and LLMs Each Fit

Data Mapping

- SLMs: Handle structured, repeatable mappings (CustomerID → ClientNumber)

- LLMs: Step in when semantic reasoning is needed

- Industry Reference: Informatica CLAIRE, Collibra metadata mapping

- Fresh Gravity Example: Penguin blends SLMs for bulk mapping, escalating edge cases to LLMs

Data Observation (Monitoring)

- SLMs: Detect anomalies and unusual patterns at scale

- LLMs: Interpret unstructured logs, suggest root causes

- Industry Reference: Datadog anomaly detection + Microsoft Copilot for Security

- Fresh Gravity Example: DevOps Compass uses anomaly models + SLMs for most alerts, with LLMs reserved for complex correlations

Data Standardization

- SLMs: Normalize structured fields like dates, codes, and units

- LLMs: Resolve ambiguity in free-text or unstructured notes

- Industry Reference: Epic Systems blends AI for ICD-10 coding vs. clinical notes.

- Fresh Gravity Example: Data Stewardship Co-Pilot utilizes SLMs for structured standardization and LLMs for free-text context.

Fresh Gravity’s Hybrid Approach

We see the future as a hybrid, not one-size-fits-all. At Fresh Gravity, we’re embedding this strategy into our accelerators:

- AiDE (Agentic Data Engineering): SLMs fine-tuned on SQL/Spark for pipeline generation; LLMs for complex design tasks.

- DevOps Compass: SLMs for log parsing and anomaly detection; LLMs for deeper root cause analysis.

- Data Stewardship Co-Pilot: SLMs for reconciliation, matching, and standardization at scale; LLMs for context-heavy edge cases.

This isn’t theory — we’re running these experiments today to prove value with real-world performance and cost data.

Why Hybrid Wins

Our philosophy is simple:

- LLMs where broad reasoning is essential

- SLMs where scale, efficiency, and cost control matter most

The result is:

- Enterprise-ready scalability without runaway costs

- Predictable, fast performance for record-level tasks

- Client empowerment to deploy in the cloud or on-prem, affordably

Conclusion

LLMs have expanded the boundaries of what AI can do. But in data management, the future isn’t about “bigger models.” It’s about smarter combinations of models that fit the task at hand.

By blending the power of LLMs with the efficiency of SLMs, we can build solutions that are innovative, scalable, and sustainable.

At Fresh Gravity, that’s exactly what we’re doing: embedding hybrid AI into our accelerators so our clients don’t just chase the hype — they see real, lasting business outcomes.

Beyond the Dossier: Unlocking Strategic Value in CMC Data

September 23rd, 2025 WRITTEN BY FGadmin Tags: artificial intelligence, CMC data

Written By Preeti Desai, Sr. Manager, Client Success and Colin Wood, Strategy & Solutions Leader, Life Sciences

In the world of bio-pharmaceutical development, Chemistry, Manufacturing, and Controls (CMC) is often described as the regulatory backbone of any product submission. Yet, despite its critical role, CMC remains one of the most underutilized, least digitized, and most manually intensive areas in the product development lifecycle.

In recent years, the pharmaceutical industry has shifted focus from merely digitizing documentation to treating data as a core business asset. As regulatory expectations evolve and time-to-market pressures increase, structured CMC data is emerging as the new API — connecting R&D, manufacturing, and regulatory functions. More than just supporting faster submissions, CMC data lays the foundation that has the potential to inform accelerated drug development, enabling companies to learn from prior experiments, optimize processes, and reduce redundancy. When structured properly, this data becomes the substrate on which AI models, ontologies, large language models (LLMs), and knowledge graphs can operate, exponentially increasing its scientific and operational value.

In part one of this blog series, we will dive into the importance of leveraging CMC data and why it matters now more than ever.

CMC — and Why It is the Regulatory Backbone

CMC refers to the comprehensive set of data required by health authorities (like the FDA, EMA) to ensure the quality, safety, and consistency of a drug product. It spans the entire lifecycle — from raw materials and analytical methods to formulation, process development, and manufacturing controls.

CMC tells the technical narrative — one built on structured evidence. It proves that the product:

- Is made consistently, batch after batch

- Meets its defined specifications, every time

- Is safe and reproducible at scale, from the lab bench to the manufacturing line

It’s not just a compliance formality — it’s the foundation that gives regulators confidence, manufacturers direction, and patients trust.

Digitization in Modern CMC Submissions: The Investment Dilemma

While fully digital regulatory submissions are still several years away — with ICH M4 and related guidelines continuing to favor document-based formats — the industry’s momentum toward digitization is undeniable. This creates a dilemma for many pharmaceutical companies: Should they invest in digital infrastructure now, or wait for regulatory mandates to catch up?

Reluctance is understandable because, despite being data-rich, the CMC landscape is riddled with inefficiencies. From early-stage discovery to commercial production, teams grapple with:

|

Challenge |

Impact |

| Unstructured Documentation | Regulatory dossiers capture only the successful version of the product story, not the dozens (or hundreds) of failed experiments that informed it |

| Fragmentation across systems | Experimental data in ELNs (Electronic Lab Notebooks), training data in LMS (Learning Management Systems), analytical results live in LIMS or spreadsheets, protocols and other documents are stored across hard copies, SharePoint, email, or regulatory systems |

| Document-centric workflows | Final reports hide rich experimental context (failures, iterations, etc.). Negative data is lost, skewing success metrics. |

| Data stuck in non-machine formats | PDFs, Word files, emails; difficult for AI/ML systems to parse |

| Missing metadata & identifiers | Experiments lack standard IDs; test methods aren’t linked to parameters |

| Incomplete experimental records | Many ELN experiments are not signed off, falsely assumed as complete |

| Cultural resistance | Scientists prioritize experimentation, not metadata entry or tagging |

| No unified data model | No central data schema across formulation, process, and analytical units |

In short, CMC data exists, but it is invisible, scattered, and disconnected.

Missed Opportunities: Data Ignored Beyond Submissions

What’s often overlooked is that CMC documentation is merely a snapshot — the “final cut” of a much richer, iterative scientific process. In many organizations, once a submission is filed, the underlying data is:

- Archived and locked away

- Disconnected from future product lifecycle activities

- Ignored for cross-product learnings or platform optimization

- Unavailable for AI/ML model training or decision support systems

The future of CMC is not a better document. It’s a better data product. Companies that start treating CMC data as a core asset — not just a compliance output — will be the ones ready for the future, long before the future arrives.

The CMC Data Model – A Game Changer

AI thrives not on raw data — but on clean, structured, and semantically linked data — which is impossible without a robust data model and a strong Master Data Management (MDM) foundation. That’s what a modern CMC strategy should aim for. While digital submissions are still on the horizon, structured, traceable CMC data creates measurable value today and positions organizations to lead when the regulatory landscape inevitably evolves.

The shift toward structured, connected CMC data is more than a digital upgrade; it marks a paradigm shift in how pharmaceutical companies can derive scientific and operational intelligence across the value chain.

At the centre of this shift lies the CMC data model, a foundational framework that organizes and links entities such as materials, processes, test methods, and experiments. When implemented correctly, this model transforms fragmented information into an integrated system of scientific truth.

Discover how Fresh Gravity helps you streamline, manage, and submit this essential data with accuracy and compliance.

|

Entity |

Description |

| Materials | Raw materials, excipients, APIs — linked to suppliers, specs, test methods. Every material, method, and process parameter is traceable across trials and products. |

| Process Parameters | All critical steps, ranges, control strategies, and development history. Product development teams query the system to find which conditions led to failed batches in similar products. |

| Test Methods | Analytical methods used across stages, their validations, and associated data |

| Experiments | Each experiment ID in a submission links back to the full scientific dataset (ELN, LMS, LIMS). IDs linked to ELNs/LMS, showing both positive and negative outcomes. |

| Product Profiles | Target product quality attributes (TPPs, QTPPs), and supporting evidence |

Each entity is:

- Structured (machine-readable)

- Linked (e.g., experiment ID connects to ELN records)

- Queryable (can be filtered, aggregated, reported on)

- HL7 FHIR Aligned (supporting future digital submission standards)

This model becomes a central data hub, enabling:

- Faster submissions (Regulatory authors auto-generate sections of CTD from verified, structured data)

- Cross-functional collaboration (R&D ↔ Regulatory ↔ QA)

- AI assistants to recommend process improvements or analytical methods based on prior outcomes

Example: Tracking an Experiment ID from LIMS to Manufacturing Using a CMC Data Model

Step 1: Experiment Creation in R&D (LIMS/ELN)

- A formulation scientist runs an experiment to optimize pH and excipient concentration for a new oral solid dosage form

- The experiment is logged in LIMS and linked in ELN with a unique Experiment ID: EXP-2025-00321

- Associated data includes:

- API lot number

- Excipient types and suppliers

- Process parameters (mixing speed, granulation time, drying temperature)

- In-process control (IPC) results

- Stability data for early formulation prototypes

The CMC data model captures this under:

- Entity: Experiment

- Attributes: ID, author, timestamp, purpose, related material IDs

- Entity: Materials

- Attributes: API, excipients, batch IDs, specs

- Entity: Process Parameters

- Attributes: equipment, duration, ranges, outputs

Result: The Experiment ID becomes a unique anchor for linking structured formulation and process development data.

Step 2: Scale-Up & Manufacturing Transfer

- The optimized process is transferred to pilot-scale manufacturing.

- Key parameters from EXP-2025-00321 are used as a baseline for defining:

- CPPs (Critical Process Parameters) and

- CQA (Critical Quality Attributes)

- At this point, MES (Manufacturing Execution System) records:

- Actual process values (e.g., granulation time, drying profile)

- Equipment used

- In-process deviations

- Batch records and performance metrics

The CMC data model now links:

- Experiment ID → Pilot batch IDs → Full-scale batch IDs

- Shared materials, methods, and parameters across scales

From Data Product to Decision Engine

For the above example with EXP-2025-00321, structured CMC data linkage, the organization could explore the following use cases with the CMC data generated and linked accurately.

|

AI/Analytics Use Case |

How the CMC Data Model Enables It |

|

| Insights | How many experiments supported this target profile? What % of trials failed? Why? Where are the gaps? What’s pending sign-off? | |

| Root Cause Analysis | If a commercial batch fails, AI traces back to EXP-2025-00321 and identifies parameter drift or raw material variability | |

| Predictive Modeling | Train models using historical experiment-to-batch mappings to predict yield, dissolution, or stability outcomes | |

| Process Optimization | AI identifies which pilot-scale parameters most strongly influenced product quality and recommends adjustments | |

| Formulation Reuse | Enables scientists to query: “Which previous formulations with similar APIs succeeded under similar conditions?” | |

| LLM-Enhanced Decision Support | A language model can be prompted: “Summarize all experiments linked to pilot batch BATCH-00215 that led to stability failures.” | |

While this blog offers only a high-level overview, the data model conceptualized by Fresh Gravity is significantly more detailed and comprehensive — built to support data structure complexity, regulatory alignment, and long-term scalability. If you’d like to explore the full scope of the model and its practical applications, get in touch with us.

In the next blog, we will dive deeper into how Master Data Management (MDM) systems and IDMP-aligned reference models can enhance this vision — particularly through the lens of ICH M4Q analysis. We’ll explore how aligning M4Q elements with IDMP concepts (like pharmaceutical product, manufactured item, and packaging) creates a more robust, interoperable data model — one that can serve both compliance needs and digital innovation.

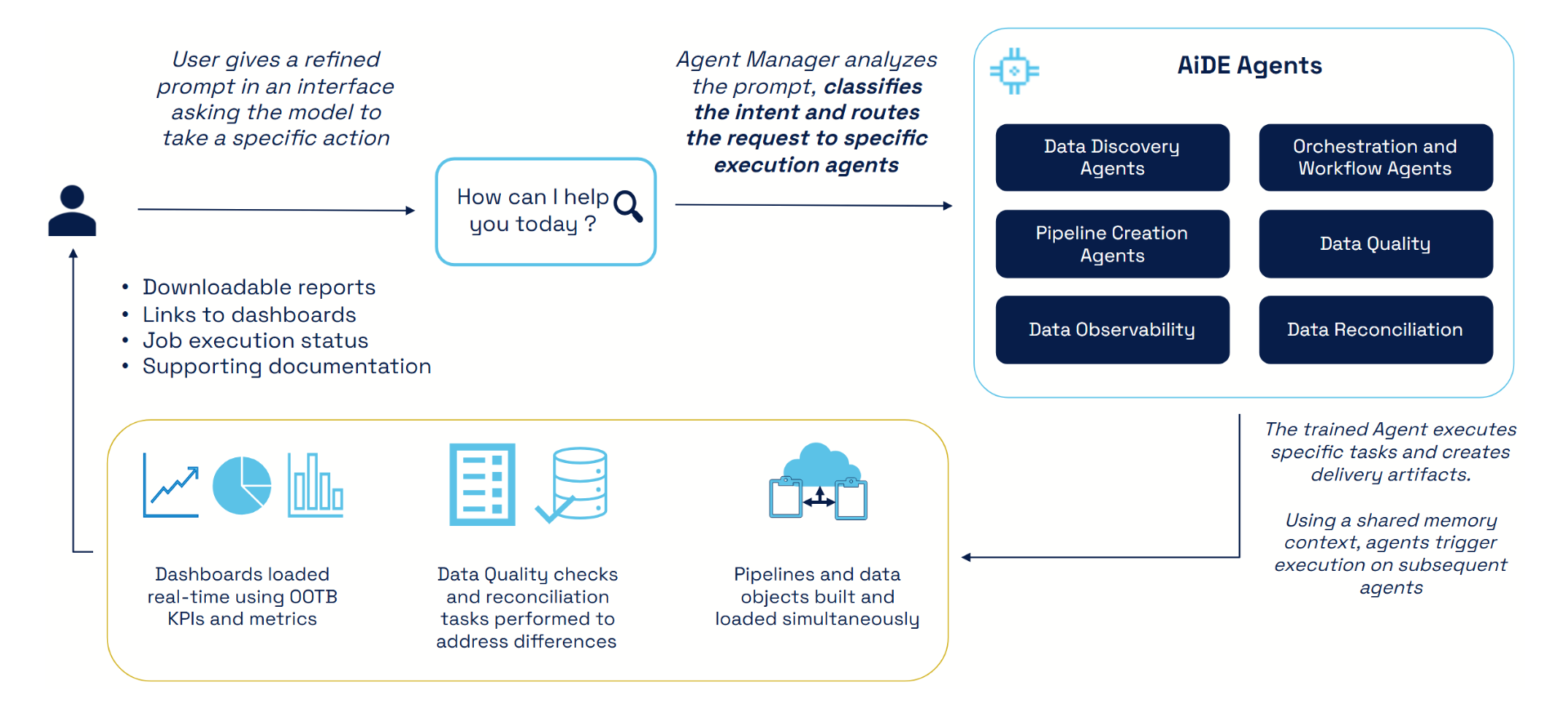

The Path to Agentic intelligent Data Engineering

September 17th, 2025 WRITTEN BY FGadmin Tags: agentic AI, AI agents, artificial intelligence, Data Engineering, improved data quality

Written by Siddharth Mohanty, Director, Data Management

Evolving Agentic AI Landscape

- Artificial intelligence (AI) is developing quickly, moving from basic assistants to self-governing systems that can act and make decisions with little human supervision.

- Generative AI, AI agents, agentic AI, and now the Model Context Protocol (MCP), a ground-breaking framework that standardizes how AI communicates with outside tools and data sources, have all been steps in this journey.

- Conventional generative AI was proficient at producing text, graphics, and code, but it lacked autonomy at its core. This gap is filled by agentic AI, which uses generative capabilities to allow for truly autonomous action.

With a keen focus on the rapidly evolving field of artificial intelligence, we, at Fresh Gravity, have created a novel approach to using AI to create dependable and scalable data engineering solutions. Our vision of a future–ready data engineering solution represents a next-generation approach to building, automating, and optimizing data workflows by leveraging LLM and AI agents to act intelligently and autonomously throughout the data lifecycle. This blog is a deep dive into one such proprietary solution built by Fresh Gravity – Agentic intelligent Data Engineering (AiDE) solution.

AI Helping Make More Data Become Better Data

With key characteristics such as autonomy, goal-oriented execution, adaptability, multi-agent collaboration and integration with tools, AI agents come power-packed to alleviate complexity and manual overhead. Key capabilities of AI Agents include:

- Operational Efficiency: Automation of repetitive processes like data entry, purification, and validation, agentic AI improves operational efficiency. Batch and live ingestion operations are coordinated through data pipeline optimization. Schemas are dynamically modified in response to patterns found. Agents maintain data integrity across systems, optimize queries for speed and cost effectiveness, and keep an eye on warehouse performance

- Increased Accuracy: By fusing the accuracy of traditional programming with the flexibility of large language models (LLMs), agentic AI can increase accuracy and precision and make better judgments depending on context and real-time data. Agents can examine large datasets, find inefficiencies in workflows, and spot anomalies without human involvement thanks to live analytics.

- Improved data quality: Via intelligent monitoring and validation. Fresh Gravity’s AiDE comes powered with Data Quality, Data Reconciliation, and Data Observability agents designed to monitor data and pipelines to detect data discrepancies. AiDE has Data Quality and Observability Dashboards OOTB, providing real-time insights

- Real-time Insights: AI agents can be designed to continuously profile incoming data, analyze patterns, offer real-time insights, and identify trends

- Optimized Performance: Agentic AI effectively manages large-scale data environments, adapting to increasing volumes and complexities Agentic AI effectively manages large-scale data environments, adapting to increasing volumes and complexities.

- Enhanced Compliance and Risk Management: This is achieved by developing models and agents to deploy policies and rules into execution. For example, we have built agents to take specific actions while processing PII data elements.

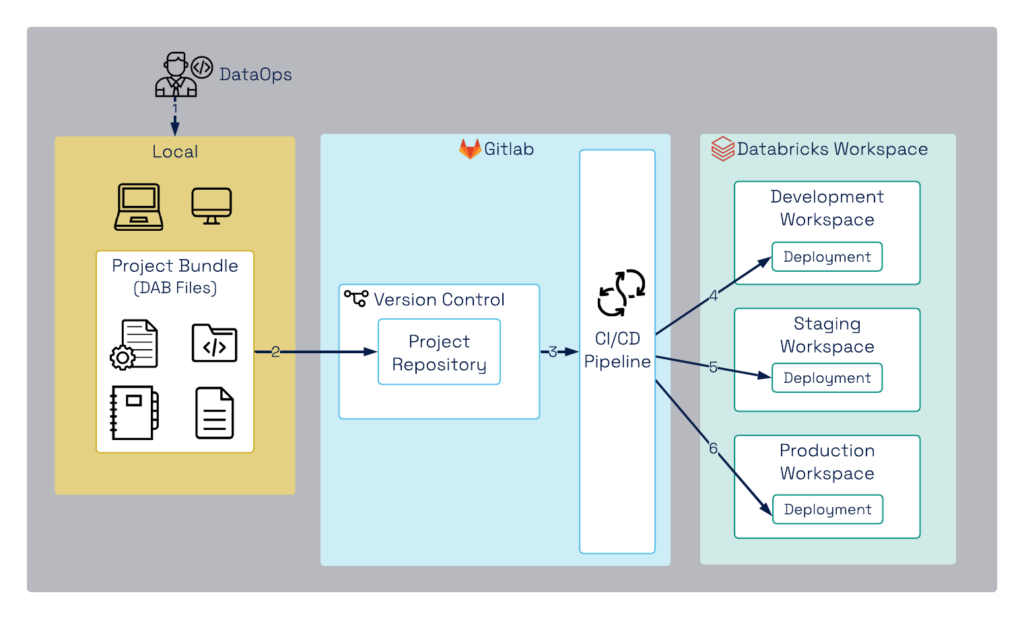

AI Agents Powering Data Engineering

Fresh Gravity’s AiDE solution accelerates the data engineering ecosystem by intelligently automating repetitive operations and providing AI-powered tools with the ability to effectively build, maintain, and improve data pipelines, thereby reducing the responsibilities of data professionals. These improvements are achieved by using AI Agents to bring efficiency gains, as listed in the earlier section, across the data engineering life cycle. These models are already proficient in debugging, optimization, and generating Python and SQL code, and their capabilities are expanding daily.

From making raw data understandable to integrating, coordinating, and automating heterogeneous data into AI operations, data engineers are now at the vanguard of the AI movement.

Fresh Gravity’s Agentic Data Engineering Solution

AI-driven Data Engineering is quickly shifting from trend to necessity, driven by the need for adaptability, speed, operational excellence, and insights at scale. By embedding intelligence throughout the data pipeline, organizations can innovate more quickly, reduce costs, mitigate risks, and lay the groundwork for the future of data-driven decision-making.

We have developed a one-of-a-kind AI-powered Data Engineering packaged solution to address all facets of a data solution (data pipelines development, data discovery, audit & control framework, data quality and reconciliation, orchestration and workflow management, data observability), Agentic intelligent Data Engineering (AiDE), an AI-powered smart and intelligent solution packaging a suite of agents performing data discovery, data ingestion, orchestration, execution, data validation, monitoring and observability.

AiDE comes prepacked with code templates and coding standards with audit features enabled Out-of-The-Box (OOTB). Data Engineers can define the interfacing of source data systems with AiDE and, through a smart prompt, provide the AI model with context to discover data, develop pipelines, create audit table entries, execute pipelines, perform QC on data executions, and build operational dashboards for observability of the workload.

AiDE also comes with a Human-In-The-Loop (HITL) framework to incorporate business/domain-specific data processing logic into the pipelines. All data pipelines created with AiDE follow our in-house core design principle of a Metadata-Driven Framework (MDF), ensuring extensibility and flexibility by design for every artifact that gets delivered.

To know more about building performant data products and solutions on-premises or on cloud, please write to us at info@freshgravity.com.

From Data to Decisions: Discover ZoomInfo’s Ready-to-Use Reltio MDM Integration

September 11th, 2025 WRITTEN BY FGadmin Tags: b2b, b2c, customer data management, data enrichment, data management, data quality assurance, master data management, Reltio, RIH, ZoomInfo

Written by Ashish Rawat, Sr. Manager, Data Management

In customer data management, where data–driven business decisions are pivotal, effectively harnessing and utilizing data is a necessity. Availability of data is no longer a problem for firms, but the identification of relevant information among vast amounts of data is certainly a puzzle. To address this critical need, Fresh Gravity, in partnership with Reltio Inc. and ZoomInfo, has developed a pre-built integration between Reltio and ZoomInfo, providing seamless data enrichment of your enterprise Master Data.

Data enrichment empowers businesses to unlock the full potential of their customer data by layering in trusted insights from reliable third-party sources. Beyond simply filling gaps, enrichment adds critical attributes and relationships that transform raw records into complete, actionable profiles. In the context of Master Data Management (MDM), this process ensures organizations are working with accurate, consistent, and up-to-date information. The result is higher data quality that drives smarter business decisions, supports regulatory compliance, and enables personalized, customer-centric experiences that fuel growth and loyalty. This pre-built integration is designed and developed to enrich customer data, both for the B2B and B2C dataspace.

- The B2B dataspace encompasses critical customer information about organizations, extending far beyond basic identifiers. These datasets typically include firmographic details (such as industry, size, and location), financial metrics, corporate hierarchies, and other key attributes that provide a 360° view of a business. When structured and managed effectively, this information becomes the foundation for stronger customer insights, improved segmentation, and more informed decision-making across the enterprise.

- The B2C dataspace centers around individual consumer information, bringing together data that enables a deeper understanding of people and their behaviors. This often includes demographic details (age, gender, income, location), contact information, behavioral signals (such as purchasing patterns, digital interactions, and channel preferences), along with lifestyle and interest indicators. When managed effectively, these data points provide organizations with the insights needed to segment audiences, deliver personalized experiences, and drive stronger engagement and conversion.

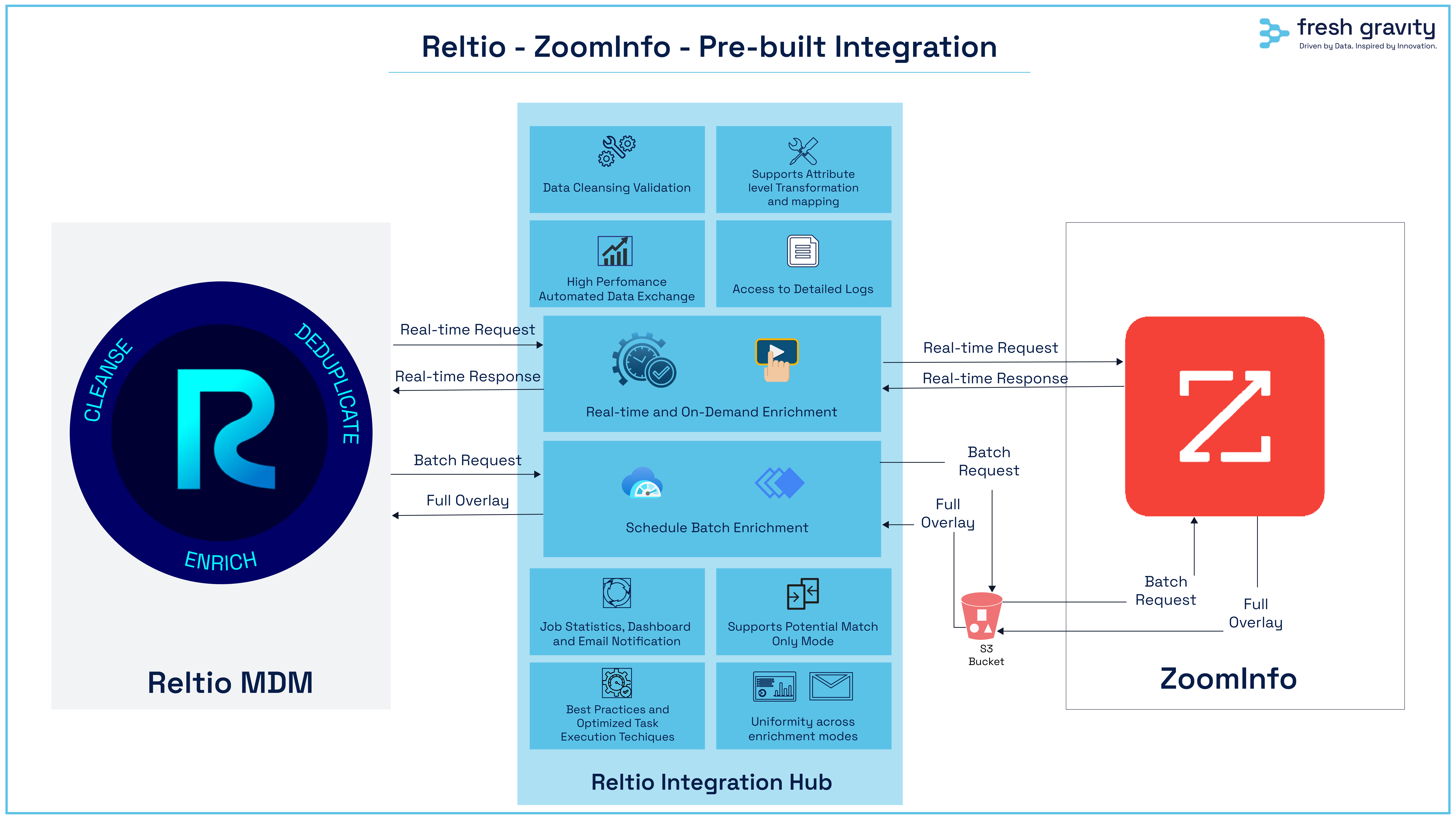

Reltio-ZoomInfo Pre-Built Integration

This integration is designed in accordance with Reltio’s Customer Data (B2B & B2C) velocity pack, which maps ZoomInfo data points to industry–specific data models. It also empowers the user to customize the integration to fulfill their business needs. This integration is built on top of Reltio Integration Hub (RIH), which is a component of the Reltio Connected Customer 360 platform.

This pre-built integration supports the following modes of data enrichment:

- Batch enrichment with a scheduler or API–based triggers

- Real–time enrichment leveraging Reltio’s integrated SQS queue

- An API–based trigger for on-demand enrichment. This can be useful for UI button-based integration

- Monitoring an automated process to ensure records registered for regular updates are constantly refreshed

Why Fresh Gravity + ZoomInfo + Reltio = Smarter Customer Data

When it comes to mastering customer data, success isn’t just about connecting systems—it’s about doing it the right way. That’s where Fresh Gravity’s expertise in Master Data Management (MDM) and our integration with ZoomInfo’s global database come together to deliver unmatched value.

Our approach is grounded in implementation best practices, ensuring every deployment is reliable and future-ready. By seamlessly connecting with ZoomInfo’s expansive and trusted dataset, organizations can enrich their records with highly accurate, comprehensive insights that fuel better decisions.

Performance is another cornerstone. With optimized RIH recipes, businesses can expect lightning-fast processing, efficient task utilization, and rock-solid reliability—even when handling high-volume data environments. And because data needs evolve as companies grow, the solution is built with scalability at its core, supporting everything from routine updates to large-scale enrichment projects.

What truly sets this integration apart is the effortless setup and smooth alignment with Reltio MDM, minimizing disruption while delivering immediate value. Once in place, users benefit from an intuitive interface that simplifies complex data operations, allowing teams to focus on insights rather than mechanics. On top of that, detailed logs, real-time statistics, and automated notifications provide complete transparency, so teams are always informed and in control.

In short, this isn’t just an integration—it’s a smarter, faster, and more scalable way to unlock the power of enriched data and take customer intelligence to the next level.

Why This Integration Delivers More Than Just Data

At the heart of this integration is a set of powerful features designed to simplify, optimize, and future-proof the way organizations manage enrichment.

- The integration takes a unified approach to data, bringing both B2B and B2C enrichment into a single solution. Businesses also gain the flexibility to run enrichment in potential match only mode, ensuring accuracy when exact matches aren’t available.

- The platform is highly configurable, with customizable transformations and properties that make it easy to adapt to unique business needs. Built with scalability in mind, it incorporates RIH task optimization and Workato-approved best practice recipes to deliver performance and reliability, even under demanding workloads.

- Maintaining trust in data is just as important as enriching it, which is why the solution includes recertification capabilities to continuously validate accuracy over time. To support transparency and control, teams can access detailed logs, real-time statistics, and proactive email notifications, keeping them fully informed at every step.

Together, these features create a foundation that not only enriches data but also makes it more trustworthy, actionable, and aligned with business goals.

Key Technologies Powering the Reltio-ZoomInfo Integration

Reltio MDM: Connected Data Platform

Reltio is a cutting-edge Master Data Management (MDM) solution that enables an MDM solution with an API-first approach. It offers top-tier MDM capabilities, including Identity Resolution, Data Quality, Dynamic Survivorship for contextual profiles, and a Universal ID for all operational applications. It also features robust hierarchy management, comprehensive Enterprise Data Management, and a Connected Graph to manage relationships. Additionally, Reltio provides Progressive Stitching to enhance profiles over time, along with extensive Data Governance capabilities.

Reltio Integration Hub: No-Code, Low-Code Integration Platform

Reltio offers a low-code/no-code integration solution, Reltio Integration Hub (RIH). RIH is a component of the Reltio Connected Customer 360 platform, which is an enterprise MDM and Customer Data Platform (CDP) solution. RIH provides the capabilities to integrate and synchronize data between Reltio and other enterprise systems, applications, and data sources.

ZoomInfo

ZoomInfo is a leading go-to-market intelligence platform that empowers businesses with accurate, real-time data on companies and professionals. By combining firmographic, demographic, and behavioral insights with advanced analytics, ZoomInfo helps organizations identify the right prospects, enrich customer records, and accelerate revenue growth. Its trusted datasets and automation capabilities make it a go-to solution for driving smarter marketing, sales, and data management strategies.

Join the Data Revolution

Ready to take your data strategy to the next level? Discover the full potential of the new ZoomInfo Integration for Reltio MDM, designed and developed by Fresh Gravity. Contact us for a personalized demo or to learn how this revolutionary tool can be a game-changer for your business.

For a demo of this pre-built integration, please write to info@freshgravity.com

Measuring CDO Impact Beyond ROI

June 30th, 2025 WRITTEN BY FGadmin Tags: data governance, data quality, ethical data use, process automation, structured data

Written by Monalisa Thakur, Sr. Manager, Client Success

The Million Dollar Mistake That Never Happened

In 2022, a major U.S. financial institution found itself under federal investigation following concerns that its mortgage approval algorithms were unintentionally discriminating against an important segment of the population. Internal data showed that Black and Latino borrowers were disproportionately denied refinancing, despite qualifying under federal relief programs during the pandemic.

This early action helped the company course-correct. It paused the flawed model’s rollout, revised its decision logic, and introduced bias checks and review frameworks. While other banks were grappling with regulatory fines and public backlash, this institution quietly sidestepped disaster.

There was no headline saying, ‘million dollars saved’. But reputational damage was avoided. Legal costs were minimized. And most importantly, trust — arguably the institution’s most valuable asset — was preserved.

This was a data governance failure and a data governance success. Governance processes failed to surface potential fairness issues in how the algorithm was applied and evaluated. There were no clear audit mechanisms or stakeholder review gates in place. It took executive vigilance to fill the gap. Had ethical oversight and bias checks been embedded earlier, the issue might never have progressed so far.

This is exactly why the Chief Data Officer (CDO) role matters—even when it’s unofficial. In this case, it was a senior data leader, not yet titled CDO, who caught the flaw. While data governance has traditionally focused on data quality, consistency, and policy enforcement, its scope is expanding. Today’s CDOs are increasingly expected to steward not just structured data, but also the fairness, transparency, and accountability of data used in AI and algorithmic models. Flagging risks early and embedding ethical guardrails is becoming a part of the modern CDO’s responsibility. Sometimes, the most important data wins are the scandals that never make the news.

The ROI Obsession: Why We Need a New Metric for CDO Success

Organizations love numbers. It’s why traditional CDO performance metrics revolve around tangible outcomes:

- Revenue growth from data-driven initiatives

- Cost savings through process automation

- Increased data quality and governance efficiency

These are important things. But they don’t tell the full story. The real power of data leadership isn’t just in making money—it’s in shaping a culture that leverages data responsibly, ethically, and innovatively.

Yet, CDOs like the one in our story often find themselves fighting for recognition because their success is measured only in visible returns. The invisible value—employee morale, trust in data, risk mitigation, and innovation culture is harder to quantify. The challenge is not that these outcomes are ignored, but that they often lack consistent ways to measure or attribute.

The CDO Scorecard: Measuring What Matters

Behind every responsible data decision is a CDO—or someone playing that role—trying to make the invisible visible. Let’s reframe success. A truly impactful CDO Impact Scorecard should reflect not just business gains, but cultural shifts and ethical strength.

-

Trust in Data

Scenario: Imagine a global retailer where frontline employees rely on intuition, leading to inconsistent decisions. The CDO initiates a data literacy program, helping teams understand and trust insights. A year later, 80% of managers make data-driven decisions confidently.

Metrics: Increase in data usage, as captured via employee surveys, tool adoption rates, and training completion metrics, implies greater trust in data.

-

Room to Innovate

Scenario: At a healthcare startup, the CDO champions data sandboxes—secure environments where teams can test AI models without risk. Within six months, a junior analyst discovers a predictive trend that improves patient outcomes, sparking new product ideas.

Metric: Number of successful pilot projects or innovative use cases emerging from data teams.

-

Ethical Leadership

Scenario: A social media giant faces scrutiny over its data practices. Its CDO proactively establishes a transparency framework, making privacy policies clear and engaging external ethics boards. Public perception shifts from skepticism to trust.

Metric: Brand sentiment analysis linked to data privacy and ethics.

-

Risk Mitigation

Scenario: An airline CDO identifies inconsistencies in maintenance data that could lead to safety risks. Fixing them prevents a potential regulatory violation, avoiding millions in fines and reputational loss.

Metric: Number of risks mitigated before escalation.

-

AI Accountability & Fairness

Scenario: Imagine a retailer rolling out an AI-powered recommendation engine. Early results looked promising until a CDO-led review revealed the training data lacked representation from focused customer groups, leading to biased outcomes. The CDO introduced governance checks for all AI models, including fairness audits and documentation standards. The model was retrained, trust improved, and a company-wide responsible AI framework was established.

Metric: Number of AI models governed through fairness reviews; frequency of training data audits for bias.

Real Stories, Real Impact.

CDOs shouldn’t constantly prove their value in revenue figures alone. They champion fairness, build resilience, and create a culture where data helps people do the right thing.

That data executive in the story at the beginning of this blog wasn’t just a technical guardian—she was a protector of trust, a promoter of fairness, and a culture-builder. And that’s what impactful data leaders do.

While the previous story was inspired by real events, the following documented cases show how CDOs—or those in equivalent roles—create meaningful, lasting impact:

- Preston Werntz (Chief Data Officer, CISA) emphasized the importance of addressing bias in AI datasets, ensuring models are fair and trustworthy, and preventing reputational damage before it occurs.

- The U.S. Department of Education launched a data literacy initiative, empowering employees to make informed, data-driven decisions, showcasing the long-term benefits of investing in data culture.

- Richard Charles (CIO, Denver Public Schools) tackled bias in AI systems by implementing rigorous oversight, ensuring ethical data use that fosters trust and transparency.

- The Chief Data Officer Council developed a Data Ethics Framework for federal agencies, proactively mitigating risks and ensuring responsible data practices, demonstrating the importance of CDOs in crisis prevention.

Many organizations still don’t have CDOs. CIOs, CFOs, even COOs play this role quietly—but meaningfully. The title doesn’t matter as much as the mindset.

Where Fresh Gravity Fits In

At Fresh Gravity, we understand that the success of a data leader goes far beyond dashboards and KPIs. While we don’t supply CDOs directly, we help lay the foundation for them to thrive.

We work with organizations to:

- Design and establish the Data Office or CDO function, with clear mandates, roles, and governance models.

- Define and track qualitative KPIs that reflect data trust, innovation readiness, and literacy

- Help set up DG operating models to ensure the CDO has cross-functional influence and visibility.

- Help leaders (CDOs or otherwise) track the value of data programs—even when it’s not immediately financial

Whether your data leadership lies with a CDO, CIO, or an evolving role, we bring the experience, empathy, and tools to make your data vision sustainable.

Want to explore how intangible data value can be measured in your organization? Or how to demonstrate data governance impact through smart KPIs? Reach out to us—we’d love to share ideas, templates, and frameworks to get you started.

The Real Takeaway

Executives must embrace a broader view of data leadership. Boards and CEOs should:

✔️ Align CDO KPIs with long-term strategic goals, not just immediate returns

✔️ Recognize that intangibles create competitive advantage

✔️ Start tracking and rewarding cultural and ethical impact

Because in the end, the most valuable things a CDO brings to the table—trust, resilience, and innovation—are often the hardest to measure, but the most important to the governance of data.

Getting Started with Agentic Architecture

June 24th, 2025 WRITTEN BY FGadmin Tags: agentic AI, artificial intelligence, autonomous agents, large language model, modern AI, multi-agent architecture

Written By Soumen Chakraborty, Vice President, Artificial Intelligence

As large language models (LLMs) like GPT-4.x, Claude, and Gemini continue to evolve, the question shifts from “What can the model do?” to “How can we make it work as part of a system?” That’s where agentic architecture comes in — a design pattern that enables multiple intelligent agents to plan, reason, and act toward a goal, often using shared tools, memory, and context.

This is the 1st part of a blog series that will demystify agentic architecture, break down its core components, and set the foundation for building real-world applications using this powerful design. In this blog, we will cover the following topics under agentic AI: concepts, stages, and how to build it.

What Is Agentic Architecture?

Agentic architecture refers to a system design in which autonomous agents, powered by LLMs or other models, operate in coordination to solve complex tasks. Each agent is given:

- A role (e.g., researcher, summarizer, executor)

- A goal (defined by a user or system)

- Access to tools (e.g., search APIs, vector stores, databases)

- Memory and context (either persistent or task-specific)

Rather than a single monolithic LLM response, agentic systems operate more like a distributed AI workforce.

What are the Key Components of Agentic Architecture?

- Agents

Intelligent, task-driven entities that can reason, act, and communicate. Examples:- A document parser or extractor agent

- A data normalizer or standardizer agent

- A data quality validator agent

- A SQL or SPARQL query generator agent

- A summarizer or insight generator agent

- Model Context Protocol (MCP) Server (Control Plane)

Orchestrates agent workflows, manages state, routes tasks, and handles memory/context. - Memory Store

Shared memory or knowledge base (e.g., vector DB, Redis) that agents can read/write to. - Tool/Function Registry

A curated list of tools agents can use, such as APIs, databases, and external plugins. - Task Queue & Execution Engine

A flow engine that sequences agent calls and handles dependencies, retries, and fallbacks.

Why Agentic Architecture?

|

Traditional LLMs |

Agentic AI Systems |

|

One-shot response |

Multi-step reasoning & execution |

|

No memory |

Persistent, shared context |

|

Hard to scale |

Composable, modular agents |

| Black box |

Transparent workflows with logs |

Agentic design brings structure, modularity, and goal orientation to LLM-based workflows.

Analogy: The AI Startup Team

Imagine building a startup:

- The CEO agent sets the strategy (goal decomposition)

- The Research agent gathers information

- The Engineer agent builds features

- The QA agent tests the output

Each agent has a specific role but works toward a common goal, all of which are coordinated by a central platform, the MCP.

Example Use Case: Life Sciences Document Copilot

- User Input: “Summarize all supply chain issues related to active trials.”

- Agents Involved:

- Entity extractor

- KG query generator

- Summarizer

- Validator

- Outcome: A multi-agent pipeline retrieves, processes, and delivers a rich, context-aware answer.

How RAG, Tools, and Agents Work Together in Modern AI Systems

In agentic architectures, you’ll often hear about RAG (Retrieval-Augmented Generation), Tools, and Agents. While these may sound technical, each plays a unique role, and together, they power intelligent, context-aware, action-driven systems.

- RAG brings in the right context by grounding the AI in relevant, up-to-date information.

- Tools provide real-world capabilities, enabling the AI to take actions beyond just generating text.

- Agents are the orchestrators—they plan, reason, and act to achieve predefined goals by leveraging both RAG and tools.

In essence, RAG supplies the knowledge, tools enable the actions, and agents drive the intelligence that ties it all together.

Sample Workflow:

A Data Steward Agent receives a task to verify customer data inconsistencies.

- RAG: The agent uses RAG to retrieve past resolutions or policies from enterprise documents.

- Tools: It then calls a data validation tool or executes a transformation via an API.

- Agent Logic: Based on the outcome, the agent decides whether to escalate, auto-resolve, or ask for SME input.

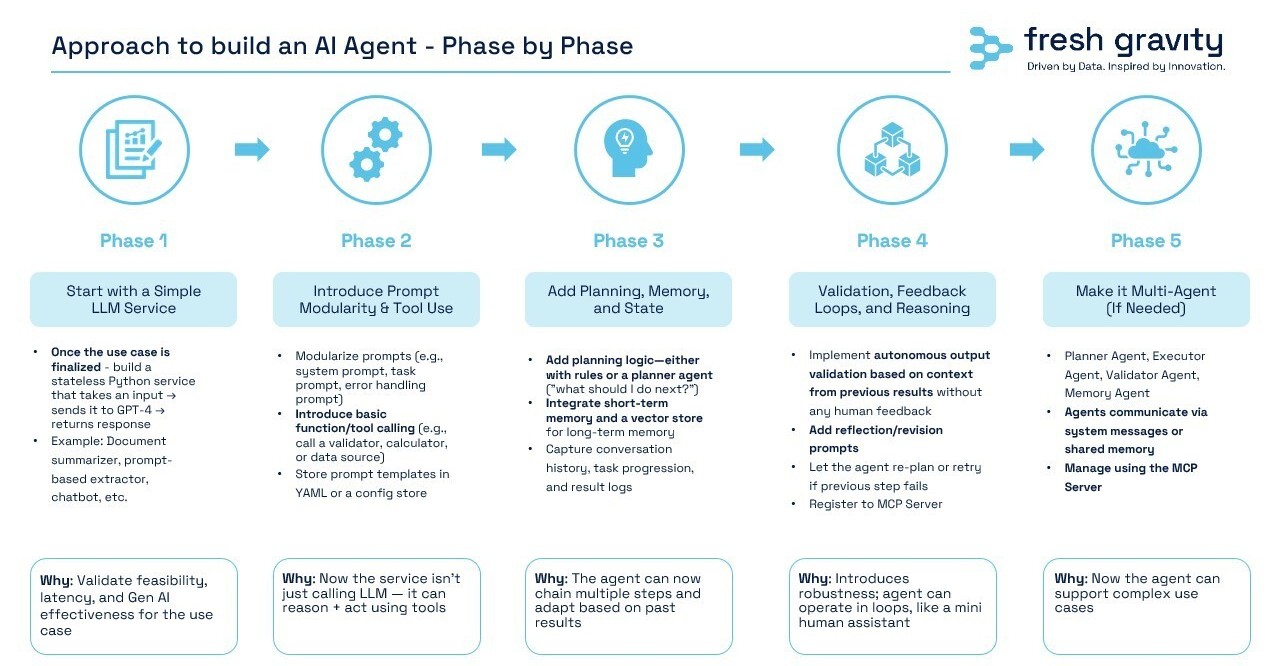

Approach to build an AI Agent – Phase by Phase

This 5-phase framework outlines a structured path to evolve from simple LLM-based solutions to intelligent, multi-agent systems capable of autonomous task execution.

- Phase 1 – Basic LLM Service:

Start with a stateless GPT-based service to validate feasibility and GenAI performance. - Phase 2 – Prompt Modularity & Tool Use:

Modularize prompts and integrate external tools to enable reasoning beyond text. - Phase 3 – Add Planning, Memory & State:

Introduce planning logic, memory (short-term & vector), and task tracking for adaptive, multi-step workflows. - Phase 4 – Feedback Loops & Validation:

Implement autonomous validation and revision via feedback (automated + human-in-the-loop). - Phase 5 – Multi-Agent Architecture:

Deploy specialized agents (Planner, Executor, Validator) that collaborate through a shared orchestration layer.

What’s Next?

Agentic architecture isn’t just a research concept — it’s a powerful blueprint for building intelligent, explainable, and collaborative AI systems.

As a next step, you should know how the brain of this architecture works. In the upcoming blogs, we will explore how to build an MCP server using FastAPI, register agents, and run your first multi-agent flow. Stay tuned.

Fresh Gravity’s AI capability is fueled by our top-tier AI engineering team is ready to support your journey in building intelligent agents. Feel free to reach out if you’d like to explore these topics further or see a live demo in action.

A 10-Minute Guide to the Evolution of NLP

May 5th, 2025 WRITTEN BY FGadmin Tags: AI, GenAI, machine learning, natural language processing, NLP, structured data, use cases

Written by Sudarsana Roy Choudhury, Managing Director, Data Management

What is NLP?

Natural Language Processing (NLP) is a subfield of artificial intelligence and machine learning that focuses on the interaction between computers and human (natural) languages. It involves a range of computational techniques for the automated analysis, understanding, and generation of natural language data. Organizations across domains such as finance, healthcare, e-commerce, and customer service increasingly rely on NLP to extract insights from large volumes of unstructured data—such as textual documents, social media streams, and voice transcripts.

Key NLP tasks include tokenization, part-of-speech tagging, named entity recognition, syntactic parsing, sentiment analysis, and text classification. Advanced models, such as transformers (e.g., BERT, GPT), enable contextual understanding and generation of human-like responses. Real-time processing pipelines often integrate NLP models with stream processing frameworks to support use cases like chatbots, virtual assistants, fraud detection, and automated document processing. The ability to interpret, analyze, and respond to natural language inputs in real time is now a critical capability in enterprise AI architectures.

The positive impact of NLP has already started to be evident across the industry. NLP technologies have many uses today, from search engines and voice-activated assistants to advanced content analysis and sentiment understanding. The use cases vary by industry. Some of the very prominent use cases are

- Healthcare – e.g. Precision Medicine, Adverse Drug Reaction (ADR), Clinical Documentation, Patient Care and Monitoring

- Retail – e.g. Product Search and Smart Product Recommendations, Sentiment Analysis, Competitive Analysis

- Manufacturing – e.g. Predictive Maintenance and Quality Control, Supply Chain Optimization with real-time language translation, Customer Feedback Analysis

- Financial Services – e.g., Automated Customer Service and Support, Fraud Detection, Risk Management, Sentiment Analysis and Customer Retention

- Education – e.g. Personalized Learning, Automated Grading and Assessment, Enhanced Student Engagement by using chatbots, Accurate Insights from Feedback

The Evolution

The origins of Natural Language Processing trace back to early efforts in machine translation, with initial experiments focused on translating Russian to English during the Cold War era. These early systems were largely rule-based and simplistic, aimed at converting text from one human language to another. This foundational work gradually evolved into efforts to translate human language into machine-readable formats—and vice versa—laying the groundwork for broader NLP applications.

NLP began to emerge as a distinct field of study in the 1950s, catalyzed by Alan Turing’s landmark 1950 paper, which introduced the Turing Test—a conceptual benchmark for machine intelligence based on the ability to engage in human-like dialogue.

In the 1960s and 1970s, NLP systems were primarily rule-based, relying on handcrafted grammar and linguistic rules for parsing and understanding language. The 1980s marked a significant paradigm shift with the advent of statistical NLP, driven by increasing computational power and the availability of large text corpora. These statistical approaches enabled systems to learn language patterns directly from data, leading to more scalable and adaptive models.

The 2000s and 2010s witnessed a revolution in NLP through the integration of machine learning techniques, particularly deep learning and neural networks. These advancements enabled more context-aware and nuanced language models capable of handling tasks like sentiment analysis, question answering, and machine translation with unprecedented accuracy. Technologies such as recurrent neural networks (RNNs), Long Short-Term Memory (LSTM) networks, and later transformer-based architectures (e.g., BERT, GPT) propelled the field into a new era of innovation.

Today, NLP continues to evolve rapidly, enabling applications in conversational AI, voice assistants, real-time translation, and automated document analysis—transforming the way humans interact with machines across industries.

NLP and the Rise of Generative AI