Exploring the AI Frontier in Data Management for Data Professionals

October 9th, 2023 WRITTEN BY Sudarsana Roy Choudhury - Managing Director, Data Management Tags: AI, Data, data management, Industry-agnostic

The origins of AI are shrouded in myths, stories, and rumors of artificial beings endowed with intelligence or consciousness by master craftsmen. One of the first formal beginnings of AI research was a workshop held on the campus of Dartmouth College, USA, during the summer of 1956. An AI winter followed around 1974, when the US government sharply cut funding for AI research. This changed in the 1980s, when the Japanese government funded the field heavily and showcased major progress. The boom that we see today started in the first decade of the 21st century, and we are now at a point where AI impacts all areas of our lives and jobs.

AI has been a hot topic for many decades, but its relevance for data professionals is greater today than it has ever been. Recently, I had the opportunity to moderate a panel discussion hosted by Fresh Gravity on “Exploring the AI Frontier in Data Management for Data Professionals”. The panelists, a group of talented data professionals with vast knowledge and in-depth experience, were Ayush Mittal (Manager, Data Science & Analytics – Fresh Gravity), Siddharth Mohanty (Sr Manager, Data Management – Fresh Gravity), Soumen Chakraborty (Director, Data Management – Fresh Gravity), and Vaibhav Sathe (Director, Data Management – Fresh Gravity).

It was an opportunity to share some thoughts and pointers on what we, as data professionals, should gear up on to leverage the opportunities that AI-driven tools provide today and are expected to provide in the future. These tools can enhance the value proposition we offer to our clients, help us work smarter, and let us spend more time and effort on the right areas instead of laboring over activities that AI offerings can do quicker and better.

To summarize, I would like to list some key takeaways from this insightful session:

- We all experience the impact of AI in our everyday lives. Understanding its nuances and making good use of the options it offers (like personalized product suggestions on retail websites) can make our lives simpler, without allowing AI to control us

- Cybersecurity is a key concern for all of us. AI can analyze complex patterns of malicious activity and quickly detect and respond to security threats

- Optimizing the use of AI can be a huge differentiator in delivering solutions for clients. Some of the tools that can be leveraged are Stack Overflow, Copilots, Google, and AI-driven data modeling tools

- For Data Management, given the huge volume and variety of data an organization has to deal with, the shift has already started. By using more AI-driven tools and services, organizations can ensure quicker insights, transformations, and movement of data across the organization. This trend will only accelerate going forward

- AI will directly improve end-user outcomes through faster delivery and higher-quality data insights and predictions. What we see now is just the beginning of a huge paradigm shift in the way value is delivered to the end user

- Establishing ethical usage of data and implementing Data Governance around data usage is key to AI success

- Not everyone needs to understand the code behind AI algorithms, but everyone should understand their core purpose and how they operate. Truly harnessing the power of any AI system hinges on a blend of foundational knowledge and intuitive reasoning, ensuring its effective and optimal use

- Some upskilling and curiosity to learn are essential for each role (like Business Analysts, Quality Assurance Engineers, Data Analysts, etc.) to be able to take advantage of the AI-driven tools that are flooding the market and will continue to evolve

- While some of us may dream of getting back to an AI-less world, some are embracing the new AI-enabled world with glee! The reality is that AI is here to stay, and the way we approach and adapt to this revolution will determine whether we can benefit while staying within the boundaries of ethical limits

Fresh Gravity has rich experience and expertise in Artificial Intelligence. Our AI offerings include Deep Learning Solutions, Natural Language Processing (NLP) Services, Generative AI Solutions, and more. To learn more about how we can help elevate your data journey through AI, please write to us at info@freshgravity.com or you can directly reach out to me at Sudarsana.roychoudhury@freshgravity.com.

Optimizing Data Quality Management (DQM)

March 1st, 2023 WRITTEN BY Sudarsana Roy Choudhury - Managing Director, Data Management Tags: Data, data management, data quality management, DQM, Industry-agnostic, quality

This is the decade for data transformation. The key is to ensure that data is available for driving critical business decisions. The capabilities that an organization will absolutely need are:

- Data as a product where teams can access the data instantly and securely

- Data sovereignty, where the governance policies and processes are well understood and implemented through a combination of people, processes, and tools

- Data as a prized asset in the organization, maintained with high quality, consistency, and trust

Data is critical to running an enterprise business. It is recognized as a major factor in driving informed business decisions and providing optimal service to clients. Hence, it is crucial to have good-quality data and an optimized approach to DQM.

As the volume of data in an organization increases exponentially, manual DQM becomes challenging. Data flowing into an organization is high in volume, mostly real-time, and may change in its characteristics. Advanced tools and modern technology are needed to provide the automation that drives the accuracy and speed required to achieve the desired level of data quality.

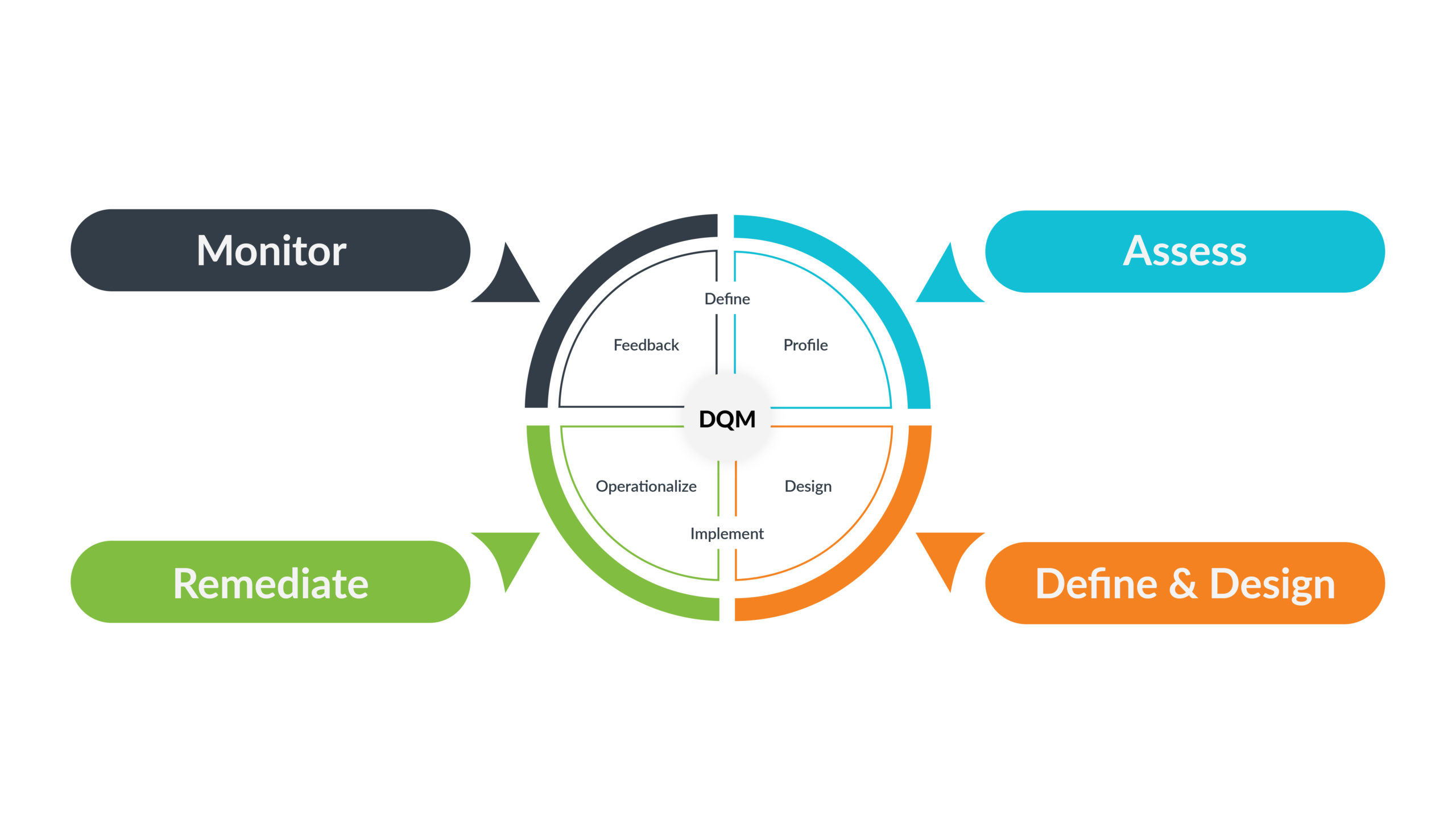

DQM in an enterprise is a continuous journey. To be relevant for the business, a regular flow of learning needs to be fed into the process. This is important for improving the results as well as for adapting to the changing nature of data in the enterprise. A continuous process of assessment of data quality, implementation of rules, data remediation, and learning feedback is necessary to run a successful DQM program.

The process of DQM can be depicted with the help of the following diagram –

How does Machine Learning (ML) help in DQM?

To drive these capabilities and accelerate data transformation for an organization, it is extremely important to have a strong DQM strategy. The burden of DQM needs to shift from manual mode to a more agile, scalable and automated process. The time-to-value for an organization’s DQM investments should be minimized.

Focusing on innovation, not infrastructure, is how businesses can get value from data and differentiate themselves from their competitors. More than ever, time, and how it’s spent, is perhaps a company’s most valuable asset. Business and IT teams today need to be spending their time driving innovation, and not spending hours on manual tasks.

By taking over DQM tasks that have traditionally been manual, an ML-driven solution can streamline the process in an efficient, cost-effective manner. Because an ML-based solution can learn more about an organization and its data over time, it can make more intelligent decisions about the data it manages, with minimal human intervention. The nature of data in an organization is also ever-changing, and DQM rules need to adapt constantly to such changes. ML-driven automation can be applied to automate and enhance all the dimensions of Data Quality, ensuring speed and accuracy for data volumes from small to gigantic.
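To make the idea of rules that adapt to changing data a little more concrete, here is a minimal sketch in Python. It is only an illustration, not a description of any particular product: the feed, the `daily_row_counts` values, and the function names are hypothetical, and a simple median-based statistic stands in for a trained ML model. Instead of a fixed threshold, the acceptable range is recomputed from recent history each time.

```python
from statistics import median


def adaptive_bounds(history, k=3.0):
    """Derive an acceptable range from recent values (median +/- k * scaled MAD)."""
    center = median(history)
    # Median absolute deviation: a spread measure that outliers barely affect
    mad = median(abs(x - center) for x in history)
    # 1.4826 makes the MAD comparable to a standard deviation for normal data
    spread = 1.4826 * mad
    return center - k * spread, center + k * spread


def is_anomalous(value, history, k=3.0):
    """Return True when a new value falls outside the range learned from history."""
    low, high = adaptive_bounds(history, k)
    return value < low or value > high


# Hypothetical daily row counts for one incoming feed
daily_row_counts = [1000, 1020, 990, 1010, 1005, 995, 1015]

print(is_anomalous(1008, daily_row_counts))  # a typical day
print(is_anomalous(400, daily_row_counts))   # a sudden drop in volume
```

Because the bounds come from the recent history rather than a hand-written rule, they shift as the feed's normal volume shifts. A production solution would likely use richer models and more signals than a single count.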

The application of ML to the different DQ dimensions can be summarized as follows:

- Accuracy: Automated data correction based on business rules

- Completeness: Automated addition of missing values

- Consistency: Delivery of consistent data across the organization without manual errors

- Timeliness: Ingesting and federating large volumes of data at scale

- Validity: Flagging inaccurate data based on business rules

- Uniqueness: Matching data with existing data sets, and removing duplicate data
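A few of these dimensions can be sketched in plain Python to show what such automation looks like at its simplest. This is a hedged illustration under stated assumptions: the sample `records`, the 0 to 120 age rule, and all function names are made up for the example, and median imputation and exact-key matching are simple stand-ins for the ML models a real solution might use.

```python
from statistics import median

# Hypothetical customer records; None marks a missing value
records = [
    {"id": 1, "email": "ann@example.com", "age": 34},
    {"id": 2, "email": "ANN@example.com ", "age": 34},
    {"id": 3, "email": "bob@example.com", "age": None},
    {"id": 4, "email": "carol@example.com", "age": 212},
    {"id": 5, "email": "dave@example.com", "age": 41},
]


def is_valid_age(age):
    # Validity: assumed business rule, ages must fall between 0 and 120
    return age is not None and 0 <= age <= 120


def fill_missing_ages(rows):
    # Completeness: fill missing ages with the median of the valid ages
    fill = median(r["age"] for r in rows if is_valid_age(r["age"]))
    return [{**r, "age": fill if r["age"] is None else r["age"]} for r in rows]


def drop_duplicates(rows):
    # Uniqueness: keep the first record for each normalized email address
    seen = set()
    unique = []
    for r in rows:
        key = r["email"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(r)
    return unique


cleaned = drop_duplicates(fill_missing_ages(records))
# Validity: flag records that still break the age rule for review
flagged_ids = [r["id"] for r in cleaned if not is_valid_age(r["age"])]

print([r["id"] for r in cleaned])
print(flagged_ids)
```

In this sketch, the record with the out-of-range age is flagged rather than silently corrected, which leaves the remediation decision to a business rule or a reviewer.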

Fresh Gravity’s Approach to Data Quality Management

Our team has deep and varied experience in Data Management and brings an efficient and innovative approach to an organization’s DQM process. Fresh Gravity can help define the right strategy and roadmap to achieve an organization’s Data Transformation goals.

One of the solutions that we have developed at Fresh Gravity is DOMaQ (Data Observability, Monitoring, and Data Quality Engine), which enables business users, data analysts, data engineers, and data architects to detect, predict, prevent, and resolve issues, sometimes in an automated fashion, that would otherwise break production analytics and AI. It takes the load off the enterprise data team by ensuring that the data is constantly monitored, data anomalies are automatically detected, and future data issues are proactively predicted without any manual intervention. This comprehensive data observability, monitoring, and data quality tool is built to ensure optimum scalability and uses AI/ML algorithms extensively for accuracy and efficiency. DOMaQ proves to be a game-changer when used in conjunction with an enterprise’s data management projects (MDM, Data Lake, and Data Warehouse Implementations).

To learn more about the tool, click here.

For a demo of the tool or for more information about Fresh Gravity’s approach to Data Quality Management, please write to us at soumen.chakraborty@freshgravity.com, vaibhav.sathe@freshgravity.com or sudarsana.roychoudhury@freshgravity.com.
